This topic is a working area to start building the specifications of the OiDB portal in a dynamic way.

- Root elements come from the user requirement document

- Items are organized by hierarchical topics

- Each leaf should be managed in a ticket

  - to centralize comments and converge on a better description

  - to follow the priority and status of development

- This topic is the glue to present a synthesis of our work (it will probably be split up depending on its size)

- Group meetings will follow general activity and manage the development plan according to priorities and general constraints (conferences, collaborations, etc.)

Depending on the general understanding and development tracking, we may freeze the specifications in a more formal document.

1. Database structure

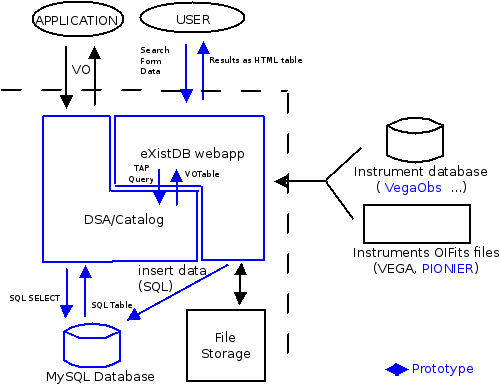

1.1 Current architecture

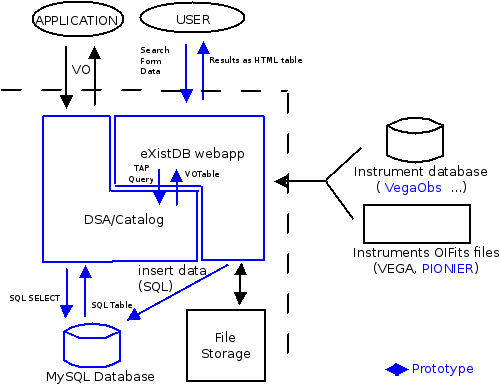

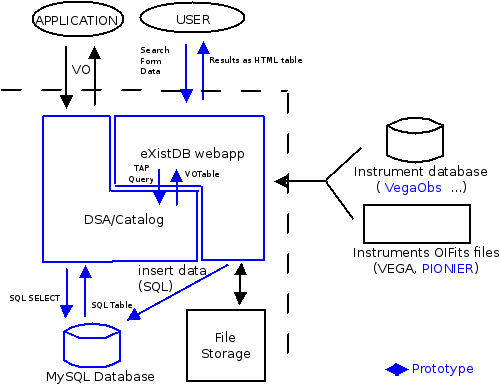

The webapp accepts user requests from the form on the search page. Currently the search interface is limited to a set of pre-defined filters that are not combinable.

The Web portal makes requests to DSA/Catalog (a layer on top of existing database providing IVOA search capabilities) that serialize data to VOTable.

The webapp parses the response (XML VOTable) and formats data as HTML tables for rendering.

When submitting new data, the webapp directly builds and executes SQL queries on the database.
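As an illustration of the parsing and rendering step described above, here is a minimal sketch of turning a VOTable response into an HTML table. It assumes the TABLEDATA serialization and uses only the Python standard library; the function names and the sample document are hypothetical and do not come from the OiDB code base.

```python
import xml.etree.ElementTree as ET
from html import escape


def local_name(tag: str) -> str:
    """Drop the '{namespace}' prefix that ElementTree puts in front of tag names."""
    return tag.rsplit("}", 1)[-1]


def votable_to_html(votable_xml: str) -> str:
    """Render the first TABLE of a VOTable document as an HTML table."""
    root = ET.fromstring(votable_xml)
    table = next(el for el in root.iter() if local_name(el.tag) == "TABLE")
    # FIELD elements carry the column names, TR/TD elements carry the cells.
    fields = [el.get("name", "") for el in table.iter() if local_name(el.tag) == "FIELD"]
    rows = [
        [td.text or "" for td in tr if local_name(td.tag) == "TD"]
        for tr in table.iter()
        if local_name(tr.tag) == "TR"
    ]
    head = "".join(f"<th>{escape(name)}</th>" for name in fields)
    body = "".join(
        "<tr>" + "".join(f"<td>{escape(cell)}</td>" for cell in row) + "</tr>"
        for row in rows
    )
    return f"<table><thead><tr>{head}</tr></thead><tbody>{body}</tbody></table>"


# Hypothetical sample response, for illustration only.
SAMPLE = """<VOTABLE xmlns="http://www.ivoa.net/xml/VOTable/v1.2">
  <RESOURCE><TABLE>
    <FIELD name="target_name" datatype="char" arraysize="*"/>
    <FIELD name="instrument_name" datatype="char" arraysize="*"/>
    <DATA><TABLEDATA>
      <TR><TD>HD 1234</TD><TD>PIONIER</TD></TR>
    </TABLEDATA></DATA>
  </TABLE></RESOURCE>
</VOTABLE>"""

print(votable_to_html(SAMPLE))
```

Matching on local tag names keeps the sketch independent of the VOTable version declared in the namespace.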

1.2 Data Model

1.2.1 The ObsCore datamodel

The storage of the data will follow the IVOA's Observation Data Model Core (ObsCore). Most of the domain specific data have been mapped to this data model (see User requirements for an Optical interferometry database). Other fields still need a definition: s_fov, s_region, s_resolution, t_resolution.

Optional elements of the data model are used, such as curation metadata for identifying collections and publications related to the data. They are also used to manage the availability of the data (public or private data, and release of the data on a set date).
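The availability rule mentioned above (public or private data, with release on a set date) could be sketched as follows. The field names follow ObsCore curation metadata, but the accepted values of `data_rights`, the use of a plain date instead of a timestamp, and the `is_public` helper are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Granule:
    obs_id: str
    obs_collection: str
    data_rights: str                  # assumed values: "public" or "secure"
    obs_release_date: Optional[date]  # None means no release date has been set
    bib_reference: Optional[str] = None


def is_public(granule: Granule, today: date) -> bool:
    """Return True if the granule may be shown to an anonymous user (assumed rule)."""
    if granule.data_rights.lower() == "public":
        return True
    # Private data become public once the release date is reached.
    return granule.obs_release_date is not None and granule.obs_release_date <= today


embargoed = Granule("g1", "example-collection", "secure", date(2015, 1, 1))
print(is_public(embargoed, date(2014, 6, 1)))  # still under embargo
print(is_public(embargoed, date(2015, 1, 1)))  # released on the set date
```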

| Ticket# | Description | % Done |
|---|---|---|
| 553 | Conform to the ObsCore Data Model for registration as a service in VO | 0 |
| 554 | Provide a unique identifier for each data | 0 |
| 555 | Define and document extensions to ObsCore Data Model | 0 |
| 556 | Accept private data and enforce access restriction to data | 0 |

As the application references external files that can be deleted or modified, it has to re-check their existence and update status from time to time and analyze them again when needed.

Someone needs to check whether there is a better data model than ObsCore and what possibilities it really offers (i.e. what real interoperability gain we have with ObsCore compared to other data models).

1.2.2 The metadata quality

1) Target.name: should be resolved by SIMBAD or be identified by its coordinates.

1.3 Metadata extraction

1.3.1 OIFits files

Specific fields are extracted from submitted OIFits files. The base model is extended with fields for counts of the number of measurements (VIS, VIS2 and T3) and the number of channels.

An observation from an OIFits file is defined as a row per target name (OI_TARGET row) and per instrument used (OI_WAVELENGTH table, INSNAME keyword): if measurements for a single target are made with two instruments, the result is two rows with different instrument names.
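The row definition above can be sketched as a grouping by (target, INSNAME) with one measurement counter per kind. The input is assumed to be a flat list of already extracted records; the output column names (`nb_vis`, `nb_vis2`, `nb_t3`) are hypothetical placeholders for the extension fields, not a confirmed schema.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

# One record per measurement: (target name, INSNAME, kind), kind in {"VIS", "VIS2", "T3"}.
Measurement = Tuple[str, str, str]


def summarize(measurements: Iterable[Measurement]) -> List[Dict[str, object]]:
    """Build one row per (target, instrument) pair with measurement counts."""
    counts: Dict[Tuple[str, str], Counter] = defaultdict(Counter)
    for target, insname, kind in measurements:
        counts[(target, insname)][kind] += 1
    return [
        {
            "target_name": target,
            "instrument_name": insname,
            "nb_vis": c["VIS"],    # a Counter returns 0 for kinds never seen
            "nb_vis2": c["VIS2"],
            "nb_t3": c["T3"],
        }
        for (target, insname), c in sorted(counts.items())
    ]


rows = summarize([
    ("HD 1", "INS_B", "VIS2"),
    ("HD 1", "INS_B", "T3"),
    ("HD 1", "INS_A", "VIS2"),
    ("HD 1", "INS_B", "VIS2"),
])
for row in rows:
    print(row)
```

With a single target observed by two instruments, the sketch yields two rows that differ by instrument name, as stated in the definition.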

1.3.2 Other data

The application may also accept files in different formats (not OIFits) through tools interfacing with the application.

Metadata from other sources may not have all fields filled, depending on the calibration level, data availability, and source database format.

See OiDbVega for a description of the import of VEGA data from the VegaObs Web Service.

1.4 Granularity

The following definition of granularity has been adopted for the database: one entry represents an interferometric observation of one science object (for level 2 or 3; one science object plus night calibrators in the potential case of L1 data, TBC) obtained at one location (interferometer) with one instrument mode during at most 24 hours (= 1 MJD).
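One possible reading of this definition is a grouping key made of the science object, the interferometer, the instrument mode and the integer part of the MJD. The sketch below assumes that "at most 24 hours (= 1 MJD)" means "same integer MJD day"; a sliding 24-hour window would be a different, equally plausible interpretation. The dictionary keys are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

GranuleKey = Tuple[str, str, str, int]


def granule_key(target: str, facility: str, instrument_mode: str, mjd: float) -> GranuleKey:
    """Key identifying one database entry under the assumed 'same MJD day' rule."""
    return (target, facility, instrument_mode, math.floor(mjd))


def group_into_granules(observations: Iterable[dict]) -> Dict[GranuleKey, List[dict]]:
    """Group raw observations (dicts with target, facility, mode, mjd) into granules."""
    groups: Dict[GranuleKey, List[dict]] = defaultdict(list)
    for obs in observations:
        key = granule_key(obs["target"], obs["facility"], obs["mode"], obs["mjd"])
        groups[key].append(obs)
    return dict(groups)


observations = [
    {"target": "HD 1", "facility": "VLTI", "mode": "MODE_X", "mjd": 56000.2},
    {"target": "HD 1", "facility": "VLTI", "mode": "MODE_X", "mjd": 56000.9},
    {"target": "HD 1", "facility": "VLTI", "mode": "MODE_X", "mjd": 56001.1},
]
for key, members in group_into_granules(observations).items():
    print(key, len(members))
```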

1.5 Hosting

The project can set up a file repository, decoupled from the main application, for L2 and L3 data that are not hosted elsewhere.

This repository will host OIFits files (L2, L3) or any other source data and serve the files for the results rows.

2. Database feeding

2.1 Data submission

The submission process differs depending on the calibration level, format and origin of the data.

At the moment, OiDB supports the following three submission processes:

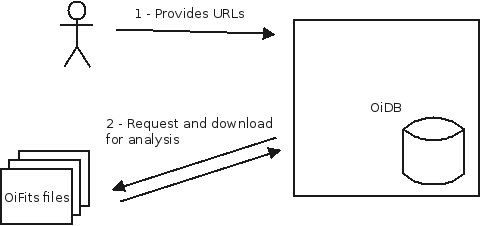

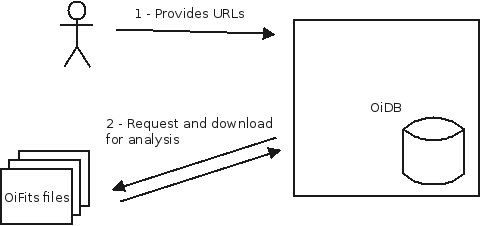

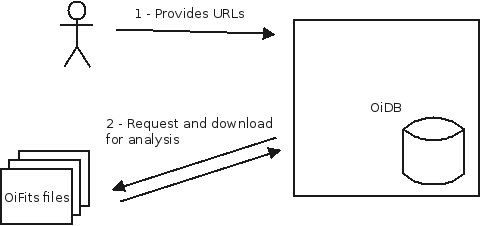

- OIFits file submission: the user provides URLs to OIFits files to analyze. The files are then downloaded by OiDB for data extraction. A copy of the file can be cached locally for further analysis. Similarly the user can request the import of a specific collection of files associated with a published paper. For specific sources, an automatic discovery process of files can be implemented.

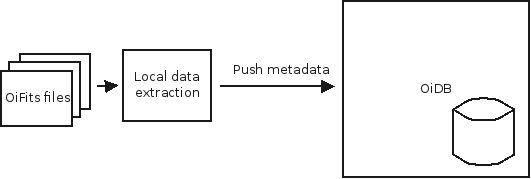

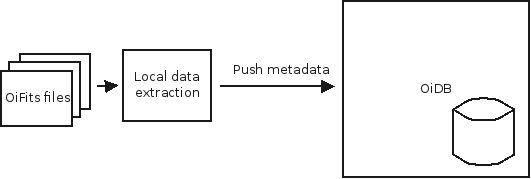

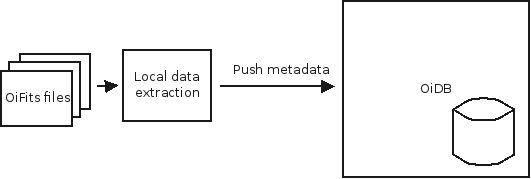

- Metadata submission (push): the user sends only the metadata for the observations. The original data are not cached by OiDB.

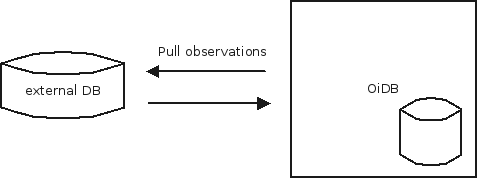

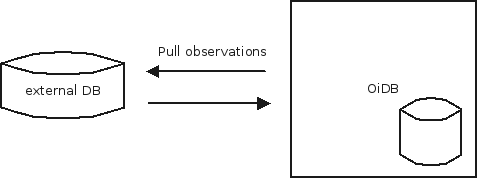

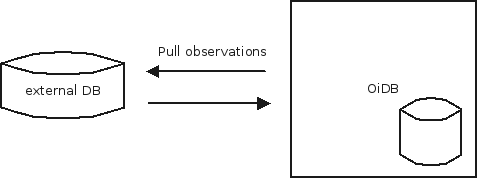

- External database (pull): OiDB queries an external service and applies a predefined, source-dependent set of rules to extract data. This analysis may require privileged access to the service. Ultimately, a synchronization mechanism can be defined to automatically update the metadata set.

2.1.1 L0 data

Submission of L0 data is based either on an XML file (format to be defined) submitted by the user or on scripts that regularly download observations from the instrument database.

2.1.2 Individual L2/L3 data

OIFits files are analyzed and metadata extracted using OIFitsViewer from oitools (JMMC). Metadata missing from the OIFits file are requested through a Web form on the submission page: collection name, bibliographic reference, data rights, etc.

An embargo period is offered as an option.

Alternatively, the user can submit an XML file from a batch process on the client side. The metadata are extracted, formatted according to the file format (to be defined) and submitted for inclusion in the database. The PIONIER data in the current prototype are imported using this method (see also ticket #550 below).

2.1.3 Collection (L3 data)

OIFits files associated with a publication are automatically retrieved from the associated catalog (VizieR Service). The data are associated with the specific publication and the author, optionally using other web services to access the information.

2.2 Data validation

For consistency of the dataset, data has to be validated as a first level of quality assertion.

Batch submission can be validated using an XML Schema.

Submissions based on OIFits files can be validated by the validator from oitools (see the checkReport element), followed by an XML Schema validation specific to OiDB.

Data failing the validation are rejected and the submitter is notified through the web page or the return value/message of the submit endpoint.

An action (ticket) would be to gather ~20 OIFits files from different instruments and different modes and see how they are validated.

Rules on the instrument mode: for any granule, the correspondence with the official list of modes (ASPRO 2 pages) is mandatory for L1/L2 and optional for L3.

Rules on the coordinates: for L3 data, coordinates are not critical if a bibcode is given, but a warning is issued if they are not valid. For L1 and L2, coordinates MUST be valid.

If coordinates are null, the OIFits submission is rejected. The name of the object is informative.
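The rules above can be read as a small decision function. The sketch below is one interpretation only: it assumes that "valid" coordinates mean a right ascension in [0, 360) degrees and a declination in [-90, 90] degrees, and that L3 data with invalid coordinates and no bibcode are rejected (the text does not state this case). All names are hypothetical.

```python
from typing import List, Optional, Set, Tuple


def coordinates_valid(ra: float, dec: float) -> bool:
    """Assumed validity test: RA and Dec in degrees within their usual ranges."""
    return 0.0 <= ra < 360.0 and -90.0 <= dec <= 90.0


def validate_granule(
    calib_level: int,
    ra: Optional[float],
    dec: Optional[float],
    mode: Optional[str],
    bibcode: Optional[str],
    official_modes: Set[str],
) -> Tuple[bool, List[str]]:
    """Return (accepted, messages) for one granule."""
    # Null coordinates always lead to rejection.
    if ra is None or dec is None:
        return False, ["rejected: coordinates are null"]

    accepted = True
    messages: List[str] = []
    if not coordinates_valid(ra, dec):
        if calib_level == 3 and bibcode:
            messages.append("warning: coordinates are not valid")
        else:
            accepted = False
            messages.append("rejected: coordinates are not valid")

    # The instrument mode is mandatory for L1/L2 and optional for L3.
    if calib_level in (1, 2) and mode not in official_modes:
        accepted = False
        messages.append("rejected: instrument mode is not in the official list")
    return accepted, messages


MODES = {"MODE_X", "MODE_Y"}  # stand-in for the official list of modes
print(validate_granule(2, 10.0, -20.0, "MODE_X", None, MODES))
print(validate_granule(3, 400.0, -20.0, None, "2014A&A...000..000X", MODES))
```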

2.2.1 Duplicates

Individual L2 data: when a submitted granule has metadata that are very similar to those of an existing granule, a justification is required: what is the difference compared to the existing granule, and does the new granule have to replace the previous one?

For L3 data: if granules have the same bibcode, the following metadata shall be checked at submission: MJD time (t_min and t_max) to within a couple of seconds, s_ra and s_dec to within a few seconds, wavelength (em_min and em_max) to within a few percent, and instrument (is it the same?).
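The L3 check can be sketched as a pairwise comparison with tolerances. The numeric tolerances below are assumptions chosen only to make the example concrete (2 s in time, 3 arcsec in position, 3 % in wavelength); the text gives no exact values and does not say whether "a few seconds" for s_ra and s_dec means arcseconds. The class and function names are hypothetical.

```python
import math
from dataclasses import dataclass

TIME_TOL_DAYS = 2.0 / 86400.0   # assumed: "a couple of seconds", in days (MJD)
POS_TOL_DEG = 3.0 / 3600.0      # assumed: "a few seconds" read as 3 arcsec
WAVELENGTH_REL_TOL = 0.03       # assumed: "a few percent" read as 3 %


@dataclass
class L3Granule:
    bib_reference: str
    t_min: float          # MJD
    t_max: float          # MJD
    s_ra: float           # degrees
    s_dec: float          # degrees
    em_min: float         # metres
    em_max: float         # metres
    instrument_name: str


def likely_duplicate(a: L3Granule, b: L3Granule) -> bool:
    """Flag two granules of the same publication as probable duplicates."""
    if a.bib_reference != b.bib_reference:
        return False
    same_time = (
        abs(a.t_min - b.t_min) <= TIME_TOL_DAYS
        and abs(a.t_max - b.t_max) <= TIME_TOL_DAYS
    )
    # Simplification: compares RA and Dec separately, ignoring the cos(dec)
    # factor and the wrap-around of RA at 360 degrees.
    same_position = (
        abs(a.s_ra - b.s_ra) <= POS_TOL_DEG
        and abs(a.s_dec - b.s_dec) <= POS_TOL_DEG
    )
    same_band = (
        math.isclose(a.em_min, b.em_min, rel_tol=WAVELENGTH_REL_TOL)
        and math.isclose(a.em_max, b.em_max, rel_tol=WAVELENGTH_REL_TOL)
    )
    return same_time and same_position and same_band and a.instrument_name == b.instrument_name


first = L3Granule("bib-A", 56000.0, 56000.1, 10.0, -20.0, 1.60e-6, 1.80e-6, "INS_A")
second = L3Granule("bib-A", 56000.0 + 1.0 / 86400.0, 56000.1, 10.0, -20.0, 1.62e-6, 1.80e-6, "INS_A")
print(likely_duplicate(first, second))
```

A positive result would not reject the submission by itself; it would only trigger the justification request or the backoffice review described in this section.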

For L2 automatic data: a procedure is still to be defined (ticket J.B. from PIONIER use cases).

2.3 Data sources

2.3.1 ESO instruments

2.3.1.1 PIONIER

2.3.1.3 MIDI

2.3.2 CHARA instruments

2.3.2.1 VEGA

Ongoing.

Is L0 almost complete?

L3 bibcodes would be very handy to have.

See OiDbVega for a description of the current process.

2.3.2.2 CLIMB and CLASSIC

Ongoing.

See OiDbChara for a description of the current process.

2.3.2.3 FLUOR

Collaboration ongoing with Vincent Coudé du Foresto.

How can their L0 data (Google document) be synchronized with OiDB?

2.3.2.4 MIRC

2.3.2.5 PAVO

2.3.3 NPOI (CLASSIC and VISION)

Collaboration ongoing with Anders Christensen.

2.3.4 Decommissioned instruments (PTI, IOTA)

2.3.5 Preparation for future instruments (GRAVITY, MATISSE)

2.3.6 Others

SUSI, aperture masking, etc.

3. User Portal

3.1 User authentication

User authentication is necessary for data submission. Access to the submission form and submission endpoints is controlled by the existing JMMC authentication service.

The name, email and affiliation of the user can help pre-fill the submission form for new data.

This authentication may also be used for access control (authorization/restriction) to specific curated data under the responsibility of their dataPIs.

3.2 Data access

3.2.1 Web portal

The project provides a Web front end for data submission and data consultation.

3.2.1.1 Submit interface

Forms are provided for injection of data depending on calibration level and data source. OIFits files can be uploaded with additional metadata that cannot be derived from their contents.

3.2.1.2 Search interface

The data from the application are displayed in tables with a default set of columns. The user can select which columns to show.

Each observation is rendered as a row in the table and may be linked to internal or external resources (see OiDbImplementationsNotes).

The page also proposes a set of filters to build custom requests on the dataset. These filters can be combined to refine the search.

The page also shows the availability status of the data.

A user can use the search function of the application without being identified. Their search history can be persisted across sessions.

3.2.1.3 Plots

UV plots, Visibility as a function of baseline...

3.2.1.4 Statistics

Basic user interactions are collected as usage statistics: number of downloads for an observation or a collection, queries.

How should several downloads of the same archive by the same user be handled? Is user identification mandatory?
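To make the open question above concrete, the sketch below shows one possible option, not a decision: keep both a raw download count and a count where repeated downloads of the same observation by the same identified user are counted once. Anonymous downloads (no user identifier) cannot be de-duplicated and are always counted. The event format and names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Optional, Set, Tuple

# One event per download: (user identifier or None for anonymous, observation identifier).
DownloadEvent = Tuple[Optional[str], str]


def download_stats(events: Iterable[DownloadEvent]) -> Tuple[Counter, Counter]:
    """Return (raw counts, counts with one download per identified user)."""
    raw: Counter = Counter()
    unique: Counter = Counter()
    seen: Set[Tuple[str, str]] = set()
    for user, obs_id in events:
        raw[obs_id] += 1
        if user is None:
            # Anonymous downloads cannot be attributed, so each one counts.
            unique[obs_id] += 1
        elif (user, obs_id) not in seen:
            seen.add((user, obs_id))
            unique[obs_id] += 1
    return raw, unique


raw, unique = download_stats([
    ("alice", "obs-1"),
    ("alice", "obs-1"),
    ("bob", "obs-1"),
    (None, "obs-1"),
    (None, "obs-1"),
])
print(raw["obs-1"], unique["obs-1"])
```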

3.2.1.5 Archive life cycle

Ideally there should be a link from the L0 metadata to the L3 data. One element that could allow this tracking is the run ID, which is specific to each observatory/instrument (see ticket 554).

3.2.1.6 Information Tables

An instrument table is needed that summarizes, for each instrument, its modes, resolutions, PI names, websites, etc.

3.2.1.7 Graphic layout

Cosmetics, not high priority.

4. Backoffice

Here are the main reasons why we need a backoffice:

- check for duplicates

- update documentation

- manage L0 metadata importation

- suppress/modify granules

- check connectivity with service (TAP)

- monitor downloads

4.2 Functions

4.2.1 L0 Functions

The backoffice allows the whole Vega database to be re-imported manually ("update Vega logs" button) in order to update it. This will soon be available for CHARA CLIMB and CLASSIC.

4.2.2 L1/L2 Functions

automatic, individual

4.2.3 L3 Functions

There should be a function to trigger the search for duplicates, and their subsequent treatment, among granules that passed the submission step (is this the case for L2 automatic?).

VizieR, individual

4.2.4 Service Functions

4.2.5 Other Functions

4.2.5.1 Documentation

4.2.5.2 Comments

4.3 Rules

This section describes when

5. Environment

5.1 Virtual Observatory

The project will be published as a service in the Virtual Observatory as a data model supported by a TAP service.

See Publishing Data into the VO.
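As an illustration of what access through a TAP service could look like from the client side, the sketch below builds a synchronous TAP query URL for an ADQL query on the standard `ivoa.obscore` table. The base URL is a placeholder, not the address of the OiDB service, and the selected columns are only an example; the request parameters (`REQUEST`, `LANG`, `FORMAT`, `QUERY`) are those of the TAP 1.0 synchronous interface.

```python
from urllib.parse import urlencode

# Placeholder address: the real service URL is not given in this topic.
TAP_BASE_URL = "https://example.org/tap"

# Example ADQL query on the standard ObsCore table.
ADQL = (
    "SELECT TOP 10 target_name, instrument_name, t_min, t_max "
    "FROM ivoa.obscore WHERE calib_level >= 2"
)


def build_sync_query_url(adql: str, base_url: str = TAP_BASE_URL) -> str:
    """Build the URL of a synchronous TAP request returning a VOTable."""
    params = {
        "REQUEST": "doQuery",
        "LANG": "ADQL",
        "FORMAT": "votable",
        "QUERY": adql,
    }
    return f"{base_url}/sync?{urlencode(params)}"


print(build_sync_query_url(ADQL))
```

The resulting URL can be fetched with any HTTP client; the response is a VOTable document.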

5.2 CDS

Link JMMC - OiDB. If a user makes online material (OIFits) available with a paper, it would be handy to have the catalog name passed automatically to OiDB.

5.3 ESO database

Collaboration ongoing with I. Percheron and C. Hummel.

6. Documentation

Technical documentation and user manual will be written along the development.

Intellectual property rights to be investigated. Online help for the users.

Notes

- DaCHS

- IVOA SSO

- IVOA ObsProvDM
